Does RAG in Workflows Have to Be This Hard?

When "low-code" still requires too much code

Just scrolling through n8n’s documentation on setting up RAG is exhausting.

n8n is a solid automation tool. But its approach to RAG feels like being handed IKEA furniture with instructions in Swedish when all you wanted was a chair to sit on.

Here’s What n8n Asks You To Do

Step 1: Add nodes to fetch your source data

Step 2: Insert a Vector Store node (choose which one!)

Step 3: Select an embedding model (wait, which one? Check the FAQ!)

Step 4: Add a Default Data Loader node

Step 5: Choose your chunking strategy:

Character Text Splitter?

Recursive Character Text Splitter? (recommended, apparently)

Token Text Splitter?

Step 6: Configure chunk size (200-500 tokens? Larger? Who knows!)

Step 7: Set overlap parameters

Step 8: Add metadata (optional but recommended)

Step 9: Create a separate workflow for querying

Step 10: Configure the agent

Step 11: Add the vector store as a tool with a description

Step 12: Set retrieval limits and enable metadata

Step 13: Make sure you’re using the SAME embedding model you used for ingestion
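To see what those knobs actually control, here’s a minimal Python sketch of the pipeline Steps 4 through 13 describe. It’s a toy under stated assumptions, not n8n’s or Needle’s code. `chunk_text` is a plain fixed-size character splitter with overlap, not the Recursive Character Text Splitter, which tries paragraph and sentence separators first. `embed` is a toy bag-of-words function standing in for a real embedding model such as text-embedding-3-large. All function names here are hypothetical. `chunk_size` and `overlap` correspond to Steps 6 and 7, `top_k` to the retrieval limit in Step 12, and reusing the same `embed` for both documents and queries is the rule in Step 13.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Fixed-size character windows; consecutive chunks share `overlap` characters."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):  # last window reached the end of the text
            break
    return chunks


def embed(text: str) -> Counter:
    """Toy 'embedding' (assumption): lowercase word counts instead of a real model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(docs: dict[str, str], chunk_size: int = 200, overlap: int = 50) -> list[dict]:
    """Ingestion: chunk every document and store each chunk with its vector."""
    index = []
    for doc_id, text in docs.items():
        for i, chunk in enumerate(chunk_text(text, chunk_size, overlap)):
            index.append({"doc": doc_id, "chunk": i, "text": chunk, "vector": embed(chunk)})
    return index


def retrieve(index: list[dict], query: str, top_k: int = 3) -> list[dict]:
    """Query: embed with the SAME function used at ingestion, return the top_k closest chunks."""
    q = embed(query)
    return sorted(index, key=lambda e: cosine(q, e["vector"]), reverse=True)[:top_k]


docs = {
    "refunds.md": "Refunds are issued within 14 days of a return being received.",
    "shipping.md": "Orders ship within 2 business days. International shipping takes longer.",
}
index = build_index(docs, chunk_size=80, overlap=20)
for hit in retrieve(index, "how long do refunds take", top_k=1):
    print(hit["doc"], hit["text"])
```

Even in this toy version, every parameter is a judgment call: shrink `chunk_size` and answers get split across chunks, raise `overlap` and you store more duplicate text, and swap `embed` at query time and the similarity scores stop meaning anything.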

Oh, and if you want to get fancy? You’ll need to understand:

The difference between text-embedding-ada-002 and text-embedding-3-large

When to use Markdown splitting vs. Code Block splitting

How to add contextual summaries to chunks

Sparse vector embeddings (if you’re feeling ambitious)

“But It’s Low-Code!”

Visual workflow builders are great. But calling this “low-code” is like calling a Tesla “low-maintenance” because you don’t have to change the oil.

Sure, you’re not writing Python scripts. But you ARE becoming a part-time data engineer who needs to understand:

Vector database architecture

Embedding model selection criteria

Text chunking strategies

Semantic search optimization

Agent tool configuration

Their own documentation literally says: “This again depends a lot on your data” when explaining chunk sizes. Translation: “Good luck, figure it out yourself.”

There’s a Better Way

Here’s the thing: RAG is powerful. It absolutely should be accessible to people who aren’t ML engineers. But “accessible” doesn’t mean “expose every technical parameter and let users guess.”

At Needle, we built RAG the way it should work: with Needle Collections, a fully-managed RAG service that handles all the complexity automatically.

What Needle Collections Handle Automatically

Needle Collections are fully-managed RAG infrastructure. Here’s what happens behind the scenes so you don’t have to think about it:

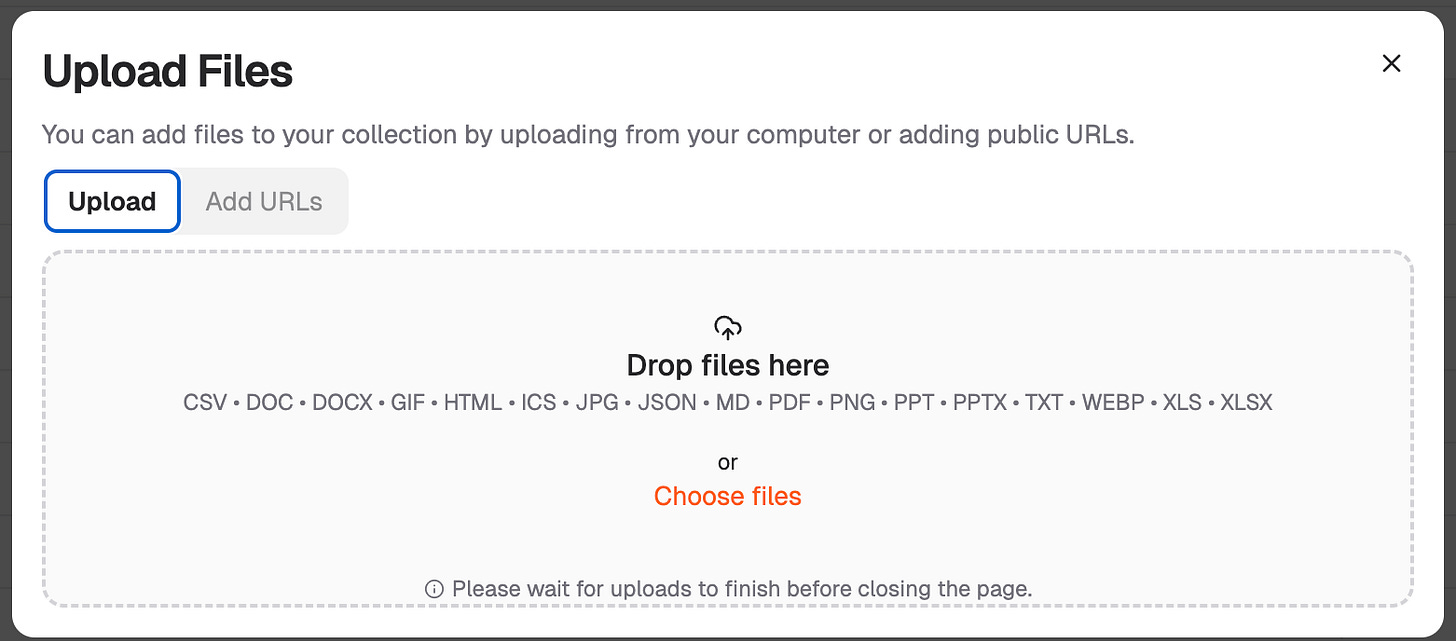

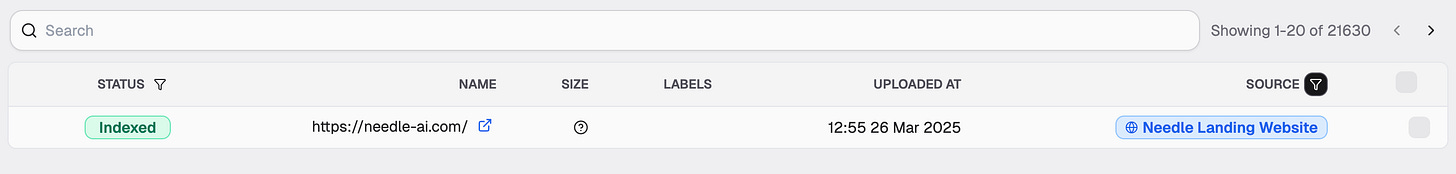

Document indexing - Upload files, paste URLs, or connect entire websites

Intelligent chunking - Optimized automatically for your content type

State-of-the-art embeddings - No model selection required

Vector database management - Production-ready, scalable infrastructure

Auto-reindexing - Via connectors for Google Drive, SharePoint, Slack, GitHub, Obsidian

Unlimited document storage - Not limited to 20-30 docs like ChatGPT Custom GPTs

Production-ready infrastructure - Managed, monitored, and maintained

No configuration. No tuning. No vector database expertise required.

2 Steps to Use Needle RAG

Step 1: Just Chat with Your Data

Create a Collection at needle.app/dashboard/collections

Upload your files (PDFs, docs, markdown, entire websites)

Ask questions in the chat interface

Done. Automatic indexing, automatic chunking, instant answers with citations.

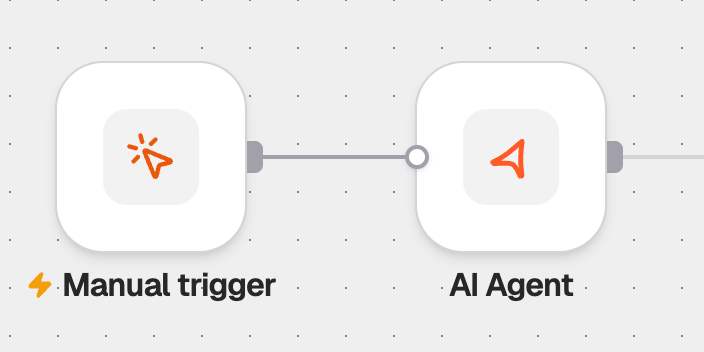

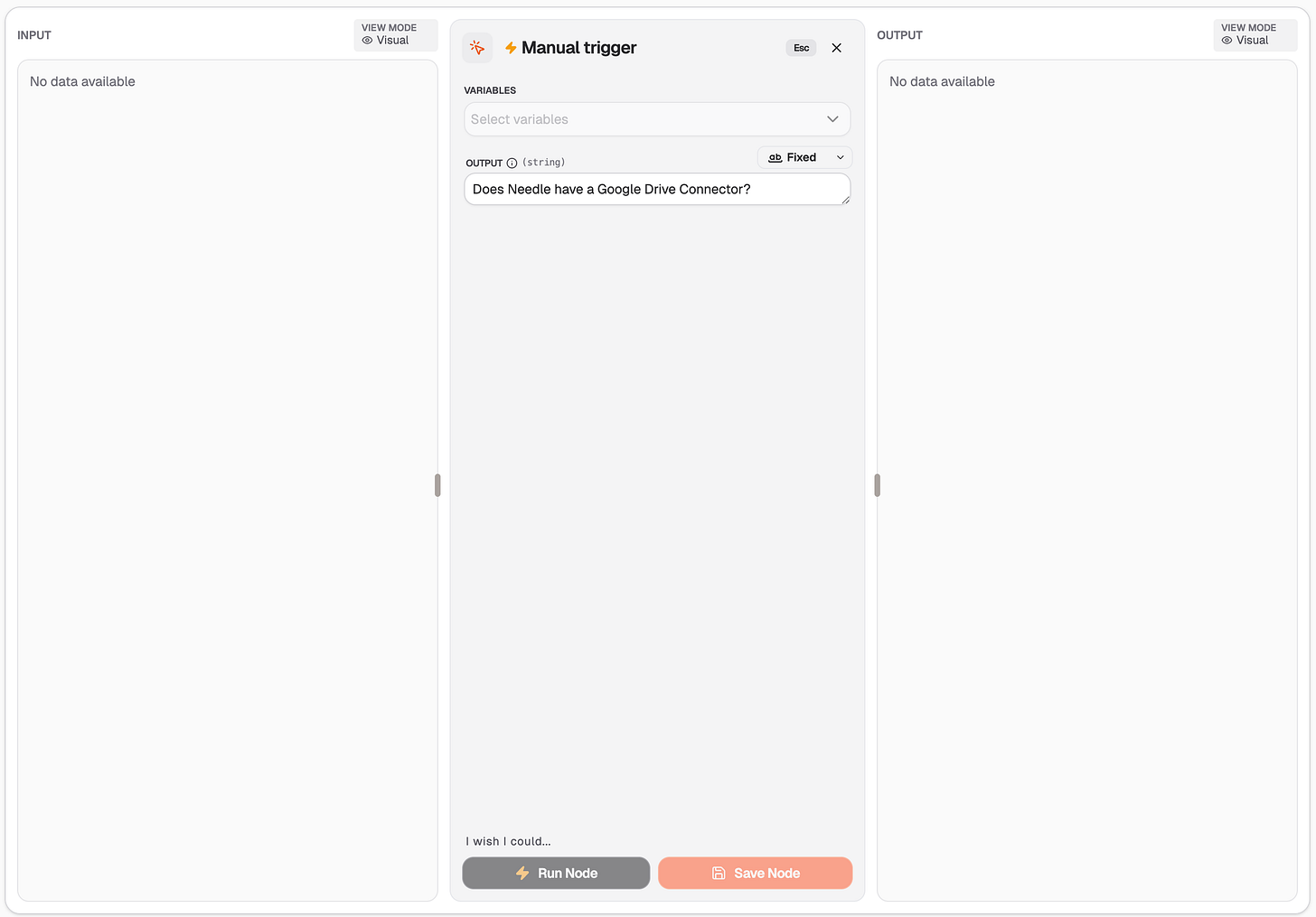

Step 2: Build a Workflow (2 Nodes)

Want to build custom RAG workflows? Here’s the entire setup:

Manual Trigger - Ask your question

AI Agent with the search_collection tool - Returns the answer

That’s it. Check out the template.

No complex chains. No prompt engineering. No vector database management. Just 2 nodes.

When Complexity is a Feature, Not a Bug

For data science teams building highly customized RAG pipelines, such as ones with specific chunking requirements for academic research, n8n’s approach gives them the control they need. Those teams WANT to tune those parameters.

But for:

Marketing teams that need to search through past campaigns

Sales teams wanting to query CRM and docs

Support teams looking to automate answers from knowledge bases

Developers building AI agents that need company knowledge

Ops people who just want AI to read Google Drive

...there’s no need for 13 steps and a PhD in embeddings. Collections are the answer.

The Real Question

n8n’s documentation proudly states: “RAG in n8n gives you complete control over every step.”

But here’s what they don’t ask: Do you WANT control over every step?

When you use Google, you don’t configure the PageRank algorithm. When you use Spotify, you don’t tune the recommendation engine parameters. You just use the damn thing.

RAG should work the same way. That’s why we built Needle Collections: production-ready RAG that just works.

Needle vs. n8n RAG: Side-by-Side

Try Needle’s Approach

No-code: Create Collection → Upload docs → Chat with your data

Build workflows: 2 nodes using our template

No chunking strategies. No vector databases. No embedding debates. Just production-ready RAG with automatic indexing and intelligent retrieval.

→ Get Started Free

→ View RAG Template

P.S. For anyone currently maintaining an n8n RAG workflow and debugging chunk overlap parameters at 11pm: “fully-managed” might be better than “fully-configurable.” Create a Collection, upload docs, and you’re done.

P.P.S. Yes, we have an n8n integration too. Use Needle’s MCP tools in your n8n AI Agent workflows if you want the best of both worlds: n8n’s automation + Needle’s managed RAG. See the docs.

Jan Heimes is Co-founder & vibe automation magician at Needle. When he’s not simplifying RAG he’s running LinkedIn automations and occasionally messaging himself by accident.